IROBOT's Genghis Khan now in the Smithsonian

Recently, the Cutter Consortium asked me to write an article on the birth of Scrum. The first Scrum incubated at Easel Corporation in 1993 and was influenced by the birth of IROBOT's first robot, Genghis Khan.

Sutherland, Jeff (2004). Agile Development: Lessons Learned from the First Scrum.

Of historical interest, I joined Easel Corporation in 1993 as VP of Object Technology after spending four years as President of Object Databases, a startup surrounded by the MIT campus in a building that housed some of the first successful AI companies.

My mind was steeped in artificial intelligence, neural networks, and artificial life. If you read most of the resources on Luis Rocha's page on Evolutionary Systems and Artificial Life, you can develop the same mindset.

I leased some of my space to a robotics professor at MIT, Rodney Brooks, for a company now known as IROBOT Corporation. Brooks was promoting his subsumption architecture, in which a bunch of independent dumb things were harnessed together so that feedback interactions made them smart, and sensors allowed them to use reality as an external database rather than relying on an internal datastore.

Prof. Brooks viewed the old AI approach, building an internal model of reality and computing off that simulation, as a failed strategy that had never worked and never would. You cannot make a plan of reality because there are too many data points, too many interactions, and too many unforeseen side effects. This is most obviously true when you launch an autonomous robot into an unknown environment.
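To make the idea of "dumb layers harnessed together, reading reality instead of a stored model" a little more concrete, here is a deliberately simplified sketch in Python. It is not Brooks's implementation: his robots wired layers of small finite-state machines together with suppression and inhibition links, while this sketch collapses that into a fixed-priority list. The `Sensors` fields, the behavior names, and the 15-degree tilt threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Sensors:
    # Fresh readings taken from the environment on every tick; nothing is remembered between ticks.
    obstacle_ahead: bool
    leg_tilt: float  # hypothetical body tilt reading, in degrees


# Each behavior looks only at the current sensor readings and either
# proposes a motor command or abstains by returning None.
Behavior = Callable[[Sensors], Optional[str]]


def wander(s: Sensors) -> Optional[str]:
    # Lowest layer: always willing to keep walking.
    return "step_forward"


def avoid(s: Sensors) -> Optional[str]:
    # Speaks up only when something is in the way.
    return "turn_left" if s.obstacle_ahead else None


def stay_upright(s: Sensors) -> Optional[str]:
    # Speaks up only when the body is tipping past a hypothetical threshold.
    return "rebalance" if abs(s.leg_tilt) > 15.0 else None


# Ordered from highest to lowest priority: the first layer that proposes
# a command suppresses every layer below it.
LAYERS: List[Behavior] = [stay_upright, avoid, wander]


def arbitrate(s: Sensors) -> str:
    for behavior in LAYERS:
        command = behavior(s)
        if command is not None:
            return command
    return "idle"  # fallback if every layer abstains
```

Calling `arbitrate(Sensors(obstacle_ahead=True, leg_tilt=3.0))` yields `"turn_left"`, while a large tilt yields `"rebalance"` no matter what the obstacle sensor says. No layer holds a plan or a map; the "smart" behavior comes from simple layers reacting to whatever the sensors report right now.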

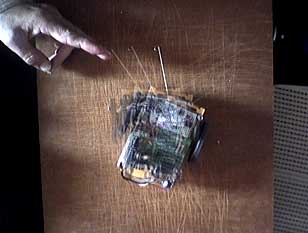

The woman I believe future intelligent robots will one day know as the primeval robot mother was also working in my offices, giving these robots what looked like emotional behavior. Conflicting lower-level goals were harnessed to produce higher-level goal-seeking behavior. The robots ran in and around my desk during my daily work. I asked IROBOT to bring Genghis Khan to an adult education course that I was running with my wife (the minister of a local Unitarian church). They laid the robot on the floor with eight or more dangling legs flopping loosely. Each leg segment had a microprocessor, there were multiple processors on its spine, and so forth. They inserted a blank neural network chip into a side panel and turned it on.

The robot began flailing like an infant, then started wobbling and rolling upright, staggered until it could move forward, and then walked drunkenly across the room like a toddler. Its behavior was so humanlike that it evoked the "Oh, isn't it cute!" response in all the women in the room. We had just watched the first robot learn how to walk.

That demo forever changed the way the people in that room thought about robots, people, and life, even though most of them knew little about software or computers.

This concept of a harness that coordinates independent processors through feedback loops, with the feedback grounded in real data coming from the environment, is central to human groups achieving higher-level behavior than any individual can achieve alone. Maximizing the communication of essential information between group members is what actually powers this higher-level behavior.

Around the same time, a seminal paper out of the Santa Fe Institute demonstrated mathematically that evolution proceeds most quickly when a system is made flexible right up to the edge of chaos. This showed that confusion and struggle were essential to emerging peak performance (of people or of software architectures, both of which are journeys through an evolutionary design space).

On this fertile ground, the Takeuchi and Nonaka paper in Harvard Business Review provided a name, a metaphor, and a proof point for product development. The Coplien paper on the Borland Quattro Pro project kicked the team into daily meetings, and daily meetings combined with time boxing and reality-based input (real software that works) got the process working. The team entered a hyperproductive state only after the daily meetings started, and Scrum was born.